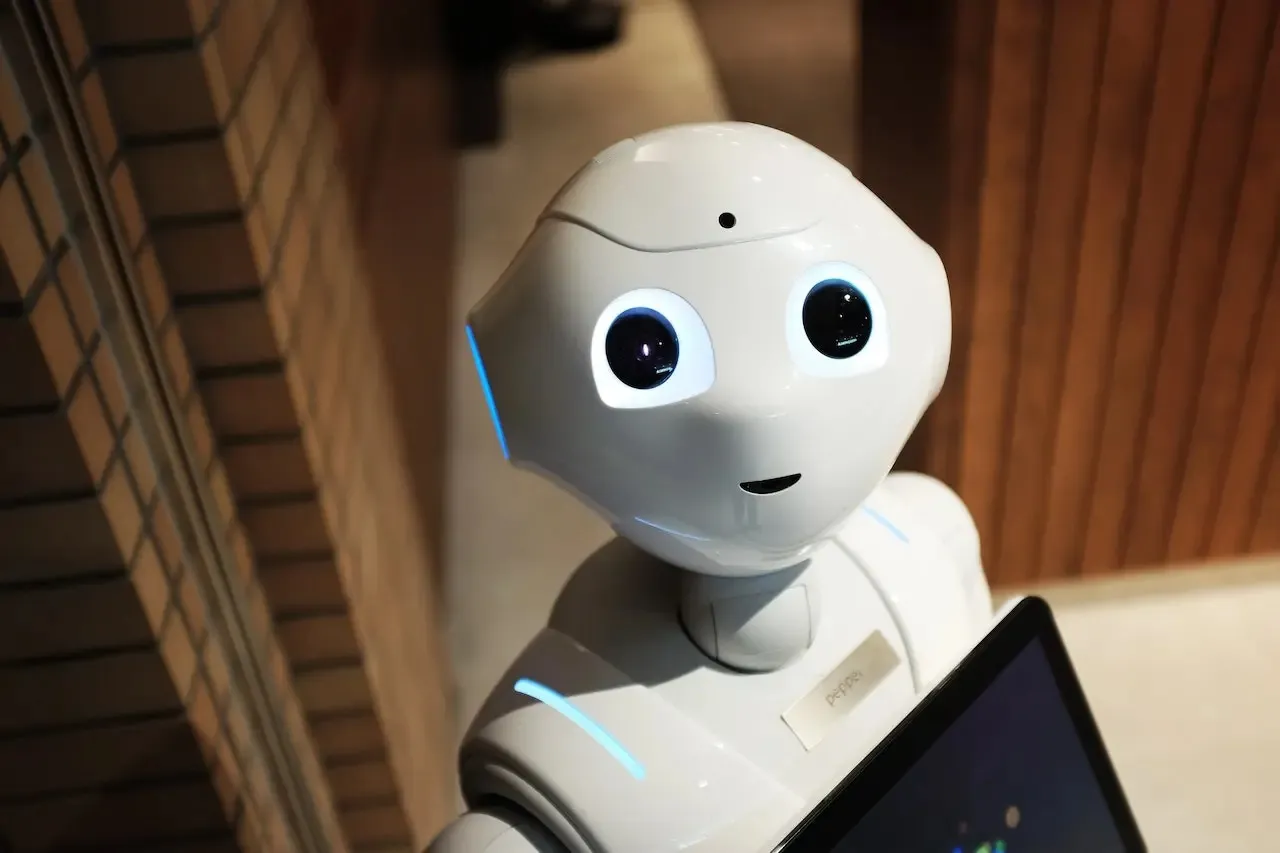

Engineers aim to give robots a bit of common sense when faced with situations that push them off their trained path, so they can self-correct after missteps and carry on with their chores. The team’s method connects robot motion data with the common-sense knowledge of large language models, or LLMs.